Welcome to Part II of the "Bit-Perfect" roundup for Windows.

In this installment, I'll focus on JPLAY (most recent version 5.1) which has been highly promoted around many of the audiophile web sites like 6moons, EnjoyTheMusic ("First Step to Heaven = JPLAY"!), High Fidelity (Polish), TNT-Audio, recently AudioStream plus all the hoopla around the terribly misleading series of articles on computer audio by Zeilig & Clawson in "The Absolute Sound" in early 2012.

If you browse around the JPLAY website (or hang out on various computer audiophile forums), you run into suggestions that process priority, memory playback, task switching latency, driver type (eg. ASIO, WASAPI, Kernel Streaming) all have some kind of effect on the playback audio quality (usual digital whipping boy for bad sound quality is of course the dreaded jitter). Sure, computers can be complicated, but is it possible for these factors to affect audio output significantly enough to hear and common enough to worry about with a modern USB DAC like in my test set-up? Maybe, but like many subtle things in life "reported" to be true, it's hard to know with confidence unless systematically tested. To address these "issues" JPLAY even has a mode where the screen gets turned off, OS processes are halted, drivers turned off, etc. the famed "hibernate mode". Since the keyboard is turned off, the computer comes back to life after the music has completed playing or if you remove a USB stick during playback! Realize that this makes the computer even less interactive than a disc spinner!

Mitchco has already used the DiffMaker program to compare JPLAY and JRiver about a year ago but was not able to detect a difference. Likewise, there have been some heated discussions over on Hydrogen Audio regarding this program.

Well, let us put this program and various of its settings on the test bench and see what comes up...

Firstly, the setup - same as Part I:

ASUS Taichi (*running JPLAY/foobar*) Win8 x64 --> shielded USB (Belkin Gold) --> TEAC UD-501 DAC --> shielded 6' RCA --> E-MU 0404USB --> shielded USB --> Win8 Acer laptop

Remember that JPLAY is essentially a "playback engine" using its own buffering algorithm and runs as an Audio Service under Windows. In this regard, it is quite unique. By itself, it has a very bare-bones text-based interface. Therefore, for ease of testing, I'm going to be using JPLAY through foobar2000 as an ASIO output "device". Within JPLAY settings, one can then specify the actual audio device and which driver to use (eg. ASIO, WASAPI, Kernel Streaming...), along with specific parameters like buffer size. There are four "engines" - Beach, River, Xtream (Kernel Streaming only), and ULTRAstream (Kernel Streaming & Windows 8 only) - I am unaware of how they are supposed to differ. Note that for all these tests, I had "Throttle" turned ON which is supposed to increase priority to JPLAY (and hence diminish task priority of other processes). Volume control was turned OFF. According to the manual, there are even "advanced settings" called DriverBuffer, UltraSize, and XtreamSize which I did not bother playing with since I presume they're deemed less important if one has to go into the Registry to adjust. Thankfully, the trial version will play ~2 minutes of uninterrupted audio before inserting ~5 seconds of silence. This is enough time to measure the audio quality using my standard tests.

I. RightMark 6.2.5 (PCM 16/44 & 24/96)

Let us start with JPLAY using the ASIO & WASAPI drivers. I think it is important to remember that the makers of JPLAY recommend using Kernel Streaming instead. My suspicion is that JPLAY essentially just passes information off to these ASIO and WASAPI drivers so logically there would be little reason to believe measurements & sound quality should change at all. Furthermore, the CPU utilization for the process "jplay.exe" with ASIO and WASAPI remained low during playback - on the order of <2% peak and usually <1% using the i5 processor. As we see later, this dramatically changes with Kernel Streaming where the program can actually get closer to the hardware and do direct processing in "kernel mode".Here's the data for JPLAY at 16/44 with ASIO and the two "engines" that support ASIO - "Beach" and "River". When you see a number like "ASIO 2", that refers to the buffer setting (number of samples). The software recommends using the smallest buffer size with "DirectLink" being 1 sample. I was not able to get the DirectLink setting working without occasional blips and errors once I started doing Kernel Streaming, but 2 works well for 16/44, 4 was good for 24/96 so I standardized on those settings throughout. As you can see, I also measured with buffer of 64 samples to see if that made any difference (I see from the manual that Xtreme only works for buffer sizes up to 32 samples - it didn't complain when I set it to 64):

Note that I used the standard foobar2000 + ASIO audio output as the comparison measurement in the leftmost column.

Frequency Response:

Noise:

THD:

Stereo Crosstalk:

For some reason, WASAPI refused to initiate for me at 16/44. But it did work at 24/96, so I have included that here as well. Again, foobar + ASIO was used for comparison on the left:

Frequency Response:

Noise:

THD:

Stereo Crosstalk:

So far, no real difference between the JPLAY playback and foobar2000.

Now, lets deal with Kernel Streaming. It is with KS that we see significant CPU utilization by "jplay.exe". Instead of <2% peak CPU utilization above, with low buffers like a setting of 2 samples for 16/44, I see peak CPU utilization of 13%, and with a 4-sample buffer at 24/96, peak CPU jumps to 16% with my laptop's i5 and the "Xtream" engine. The "ULTRAstream" engine chews up even more CPU cycles by another 1-2% above those previous numbers. The moment the buffer size was increased to 64 samples, CPU utilization dropped to 3% with 16/44 and 6% with 24/96 peak. It looks like only when Kernel Streaming is used, does JPLAY actually kick in to maintain those tiny buffer settings.

Starting with 16/44, here's Kernel Streaming with mainly the "Xtream" and "ULTRAstream" engines since these two cannot be used with ASIO. I included "Beach" in these measurements as well out of interest. The test on the far right with "hiber" refers to the use of the "hibernate mode" where the computer screen, OS processes, drives, etc. turn off during playback. Again, foobar + ASIO was used as the comparison:

Frequency Response:

Noise:

THD:

Stereo Crosstalk:

Here is 24/96:

Frequency Response:

Noise:

THD:

Stereo Crosstalk:

So far, despite all the changes in CPU utilization, different audio "engines" and buffer settings, I see no substantial change in the measurements that would suggest a qualitative difference in terms of the analogue output signal from my DAC.

JPLAY supposedly can support DSD playback. I did not test this function.

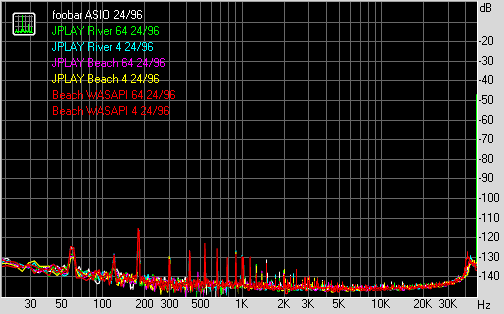

Part II: Dunn J-Test for jitter

I did a whole suite of J-Test with all the different audio "engines", either 2 or 64 sample buffers, ASIO/WASAPI/KS, also tried the "hibernate" mode. For brevity, here's a selection of 16-bit jitter spectra using various settings:foobar2000 ASIO:

Beach ASIO 64-sample buffer:

River ASIO 2-sample buffer:

Xtream Kernel Streaming 64-sample buffer:

ULTRAstream Kernel Streaming 2-sample buffer:

ULTRAstream Kernel Streaming + Hibernate 2-sample buffer:

Essentially no difference in the J-Test spectra.

Recall that the 24-bit Dunn J-Test is done with a 24/48 signal. While doing this test using Kernel Streaming mode, something strange was found.

This is what the 24-bit Dunn J-Test looks like with foobar2000 + ASIO (notice primary signal at 12kHz rather than 11kHz with the 16-bit test):

The 24-bit LSB modulation signal is buried under the noise level. This is quite normal.

Here is Beach + ASIO:

Again, quite normal - we're still using the TEAC ASIO driver.

Look what happens with 24-bit J-Test Beach + Kernel Streaming (doesn't matter what buffer size):

Eh? What's with all the modulation signal bursting through like with the 16-bit test?!

My suspicion is that JPLAY isn't handling the last 8-bits in the 24-bit data properly... One possible scenario is where the last 8 bits got flipped from LSB to MSB, thus causing the LSB signal to show through at a higher level. With RightMark, this is what it looks like:

We've "lost" the last 7 bits of resolution at 24/48 with Kernel Streaming causing the dynamic range to drop to 102dB (17-bits resolution) instead of the usual >110dB (24-bits into the noise floor). I considered the possibility that this may represent some sort of dithering but why would it be applied to 24/48 and not 24/96?

Strangely, this anomaly did not show up at 24/96. I did not bother to check if 88/176/192kHz sampling rates were affected.

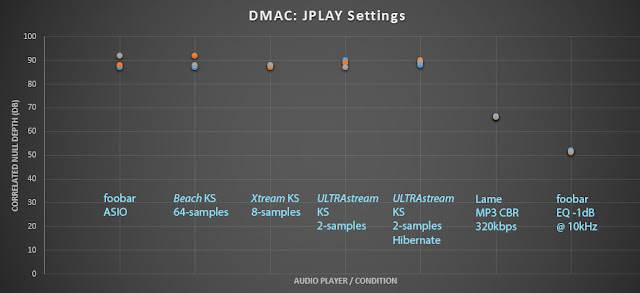

Part III: DMAC Protocol

Time for the machine listening test of 24/44 composite music using Audio DiffMaker. As shown in previous blog posts, this test seems to be quite good in detecting even relatively small changes like minute EQ adjustments, difference between AAC, MP3, etc... This test is also similar to what mitchco did last year.Here are measurements of a few settings in JPLAY in comparison to foobar ASIO as reference. I included the most "extreme" one that I could perform - ULTRAstream + 2-sample buffer + hibernate mode. As usual a couple of "sensitivity tests" with MP3 320kbps and slight EQ change in foobar for comparison:

The machine was able to correlate the null depth down to around 90dB with all the JPLAY settings and foobar suggesting no significant difference in sound in comparison to the reference foobar + ASIO playback.

Part IV: Conclusion

Based on these measurements, a few observations:1. 24/48 with Kernel Streaming appears buggy as shown with the 24-bit J-Test and RightMark result. Don't use it (as of version 5.1) if you want accurate sound. However, subjectively it still sounds OK to me since it's still accurate down to 16-bits at least. ASIO works fine. 24/96 is fine. I don't know if other sampling rates with 24-bit data are affected. For some reason I could not get WASAPI 16/44 to work with JPLAY even though it was fine with foobar2000.

2. Technically, JPLAY appears to be bit perfect with 16/44 and likely 24/96 based on my tests (of course we cannot say this for 24/48). Since the program claims to be bit perfect, this is good I guess.

3. I was unable to detect any evidence of sonic difference at 16/44 and 24/96 compared to a standard foobar set-up. RightMark tests look essentially the same. Over the months of testing, I see no evidence still that software changes the jitter severity with CPU load, different software, even different DACs (as I had postulated awhile back in this post). DiffMaker null tests were also unable to detect any significant difference in the "sound" of the analogue output from the TEAC UD-501.

4. Still no evidence that extreme settings like "hibernate mode" which reduces the utility of the computer makes any sonic difference. Of course, it's possible that this could make a difference with some very slow machines like a 1st generation single-core Atom processor with small buffer settings doing Kernel Streaming... But in that case, why not just increase the buffer size with Kernel Streaming (why all the "need" for low buffer settings and obsession over low latency for just playback?!) or just go with an efficient ASIO/WASAPI driver? I'd also recommend a processor upgrade if you're still rocking an old Atom!

Over the 3 nights I was performing these tests, I took time to listen to music with the various JPLAY settings - probably ~3 hours total. It sounds good subjectively (other than the brief interruptions every 2 minutes or so for the trial version). The i5 computer shows a little bit of lag with Kernel Streaming and low buffer size if I'm trying to do something else. I listened to Donald Fagen's The Nightfly and Peter Gabriel's So (2012 remaster) in 24/48 with Kernel Streaming and didn't think they sounded bad despite the issue I found (remember, the anomaly was down below 16-bits). Dire Strait's Brother In Arms sounded dynamic and detailed as usual. Likewise Richard Hickox & LSO's Orff: Carmina Burana (2008, 24/88 SACD rip) sounds just as ominous. (Almost) Instantaneous A-B'ing is possible in foobar changing ASIO output from the TEAC driver to JPLAY and I did not notice any significant subjective tonal, amplitude, or resolution difference using my Sennheiser HD800 headphones with the TEAC UD-501 DAC flipping back and forth a number of times.

Bottom line: With a reasonably standard set-up as described, using a current-generation (2013) asynchronous USB DAC, there appears to be no benefit with the use of JPLAY over any of the standard bit-perfect Windows players tested previously in terms of measured sonic output. Nor could I say that subjectively I heard a difference through the headphones. If anything, one is subjected to potential bugs like the 24/48 issue (I didn't run into any system instability thankfully), and the recommended Kernel Streaming mode utilizes significant CPU resources when buffer size is reduced (which the software recommends doing). I imagine that CPU utilization would be even higher if I could have activated the DirectLink (1-sample buffer) setting.

Finally, there's the fact that this program costs €99. A bit steep ain't it? JRiver costs US$50, Decibel on the Mac around $35, foobar2000 FREE and these all feature graphical user interfaces and playlists at least! Considering my findings, I'm unclear with what DAC or computer system one would find tangible benefits after spending €99 for this program.

As usual, I welcome feedback especially with objective data or controlled test results (any JPLAY software developers care to comment on how the audio engines were "tuned"?). I would also welcome independent testing to see if my findings can be verified on other hardware combinations (especially that 24/48 issue).

Music selection tonight: Paavo Järvi & Orchestre de Paris - Fauré: Messe de Requiem (2011). Lovely rendition of Pavane for mixed choir!

As usual... Enjoy the tunes... :-)

0 comments:

Post a Comment